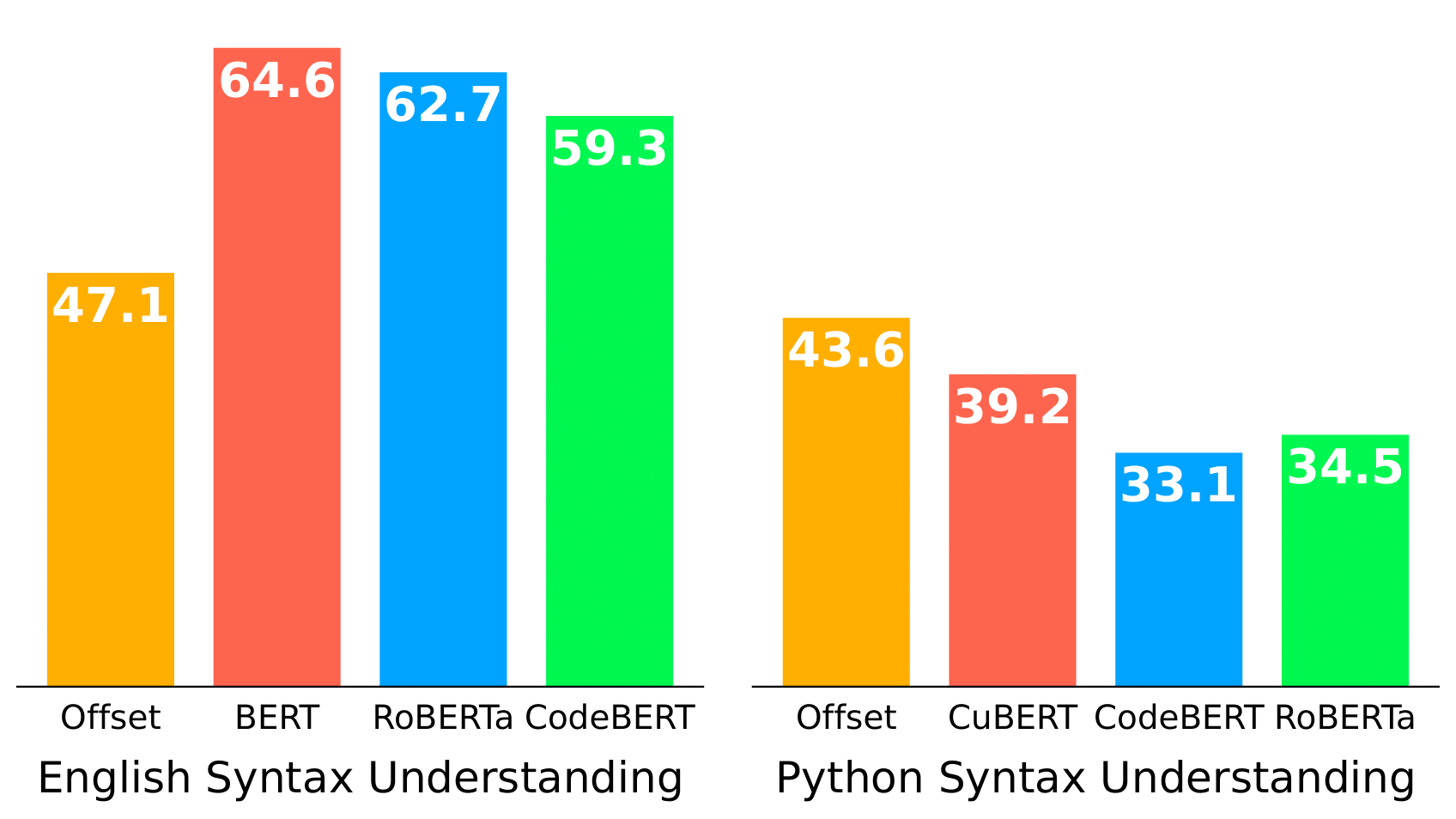

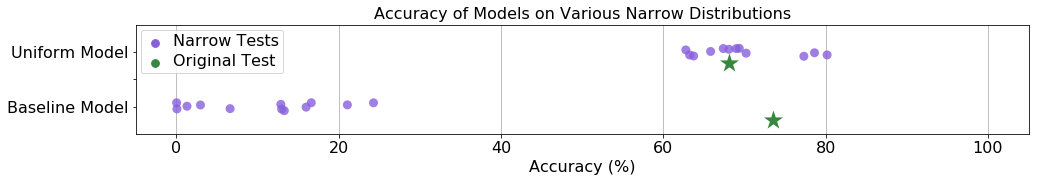

Benchmarking Language Models for Code Syntax Understanding

Da Shen, Xinyun Chen, Chenguang Wang, Koushik Sen, Dawn Song.

Findings of Conference on Empirical Methods in Natural Language Processing (EMNLP Findings). December, 2022.

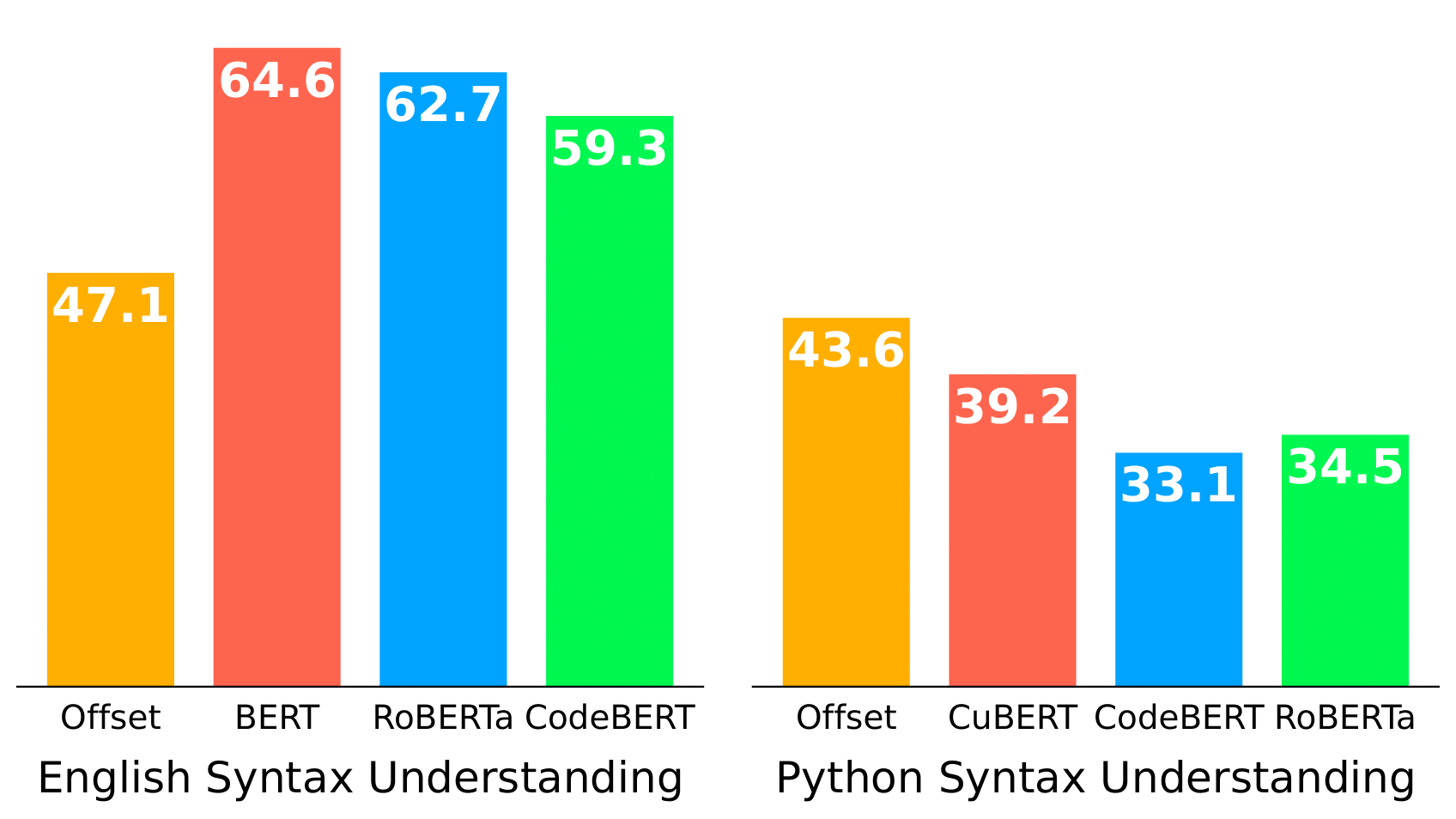

Latent Execution for Neural Program Synthesis Beyond Domain-Specific Languages Xinyun Chen, Dawn Song, Yuandong Tian. Advances in Neural Information Processing Systems (NeurIPS). December, 2021.

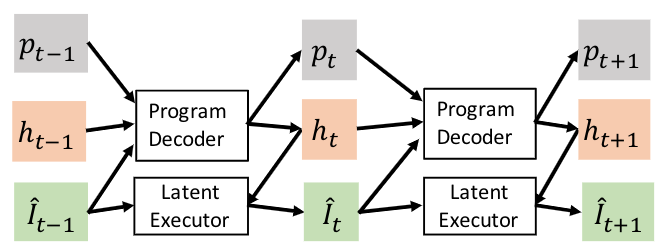

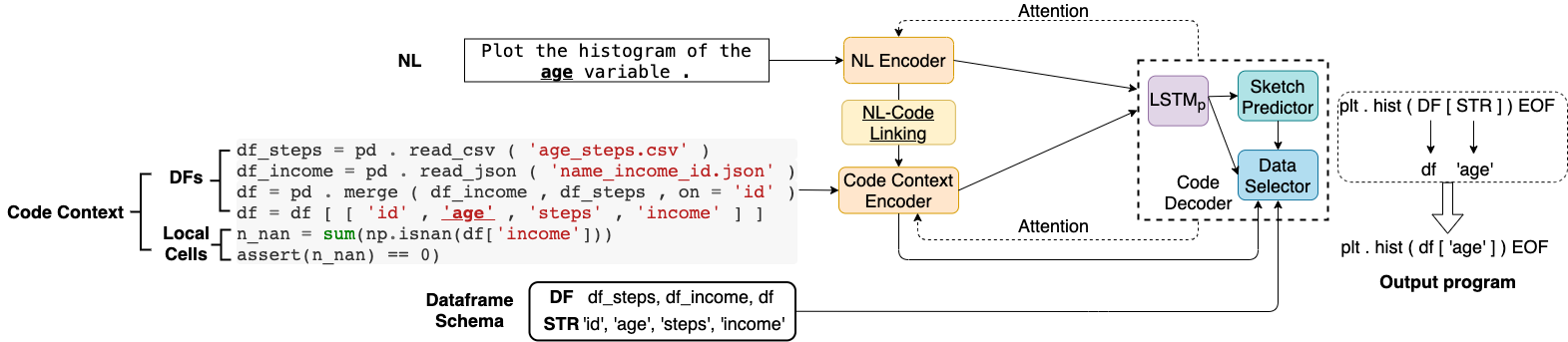

PlotCoder: Hierarchical Decoding for Synthesizing Visualization Code in Programmatic Context Xinyun Chen, Linyuan Gong, Alvin Cheung, Dawn Song. Annual Meeting of the Association for Computational Linguistics (ACL). August, 2021.

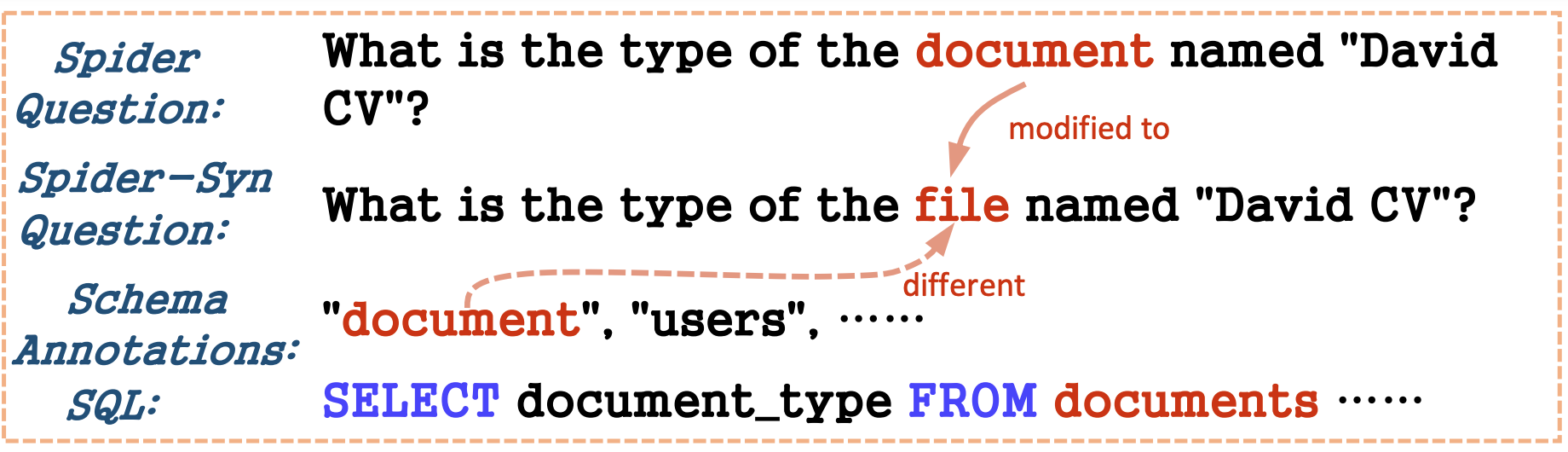

Towards Robustness of Text-to-SQL Models against Synonym Substitution Yujian Gan, Xinyun Chen, Qiuping Huang, Matthew Purver, John R. Woodward, Jinxia Xie, Pengsheng Huang. Annual Meeting of the Association for Computational Linguistics (ACL). August, 2021.

SpreadsheetCoder: Formula Prediction from Semi-structured Context Xinyun Chen, Petros Maniatis, Rishabh Singh, Charles Sutton, Hanjun Dai, Max Lin, Denny Zhou. International Conference on Machine Learning (ICML). July, 2021.

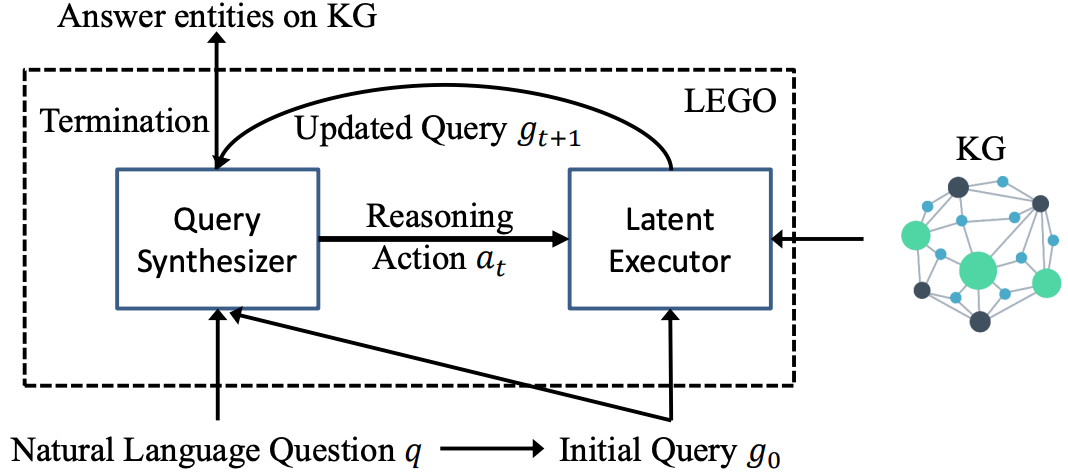

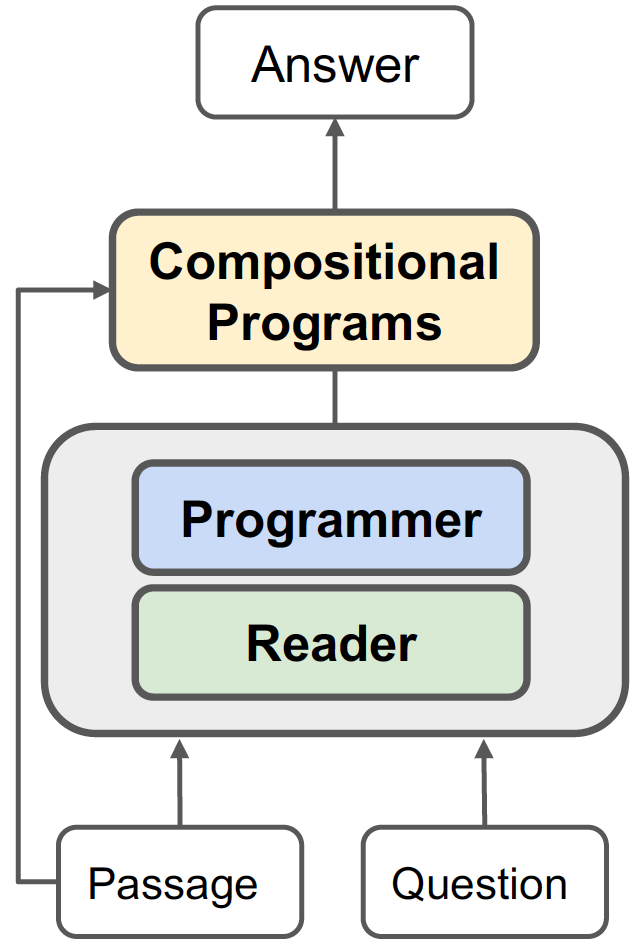

LEGO: Latent Execution-Guided Reasoning for Multi-Hop Question Answering on Knowledge Graphs Hongyu Ren, Hanjun Dai, Bo Dai, Xinyun Chen, Michihiro Yasunaga, Haitian Sun, Dale Schuurmans, Jure Leskovec, Denny Zhou. International Conference on Machine Learning (ICML). July, 2021.

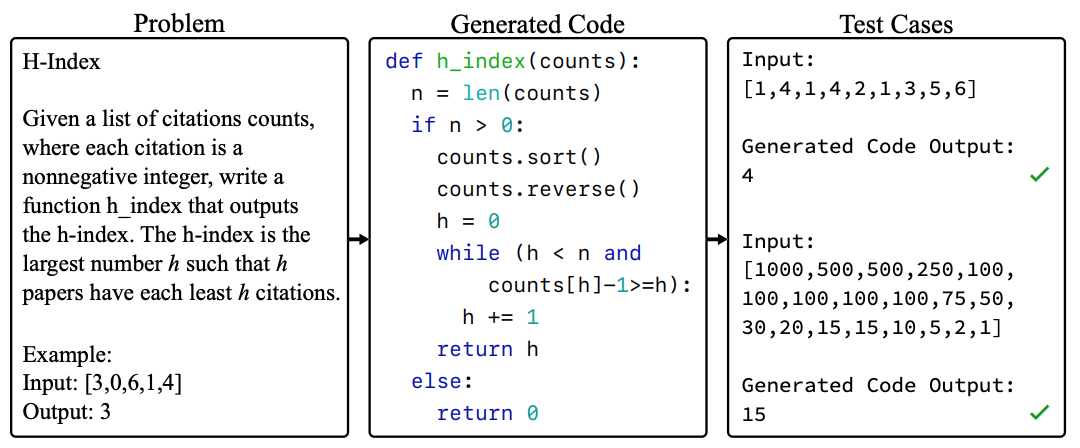

Measuring Coding Challenge Competence With APPS Dan Hendrycks*, Steven Basart*, Saurav Kadavath, Mantas Mazeika, Akul Arora, Ethan Guo, Collin Burns, Samir Puranik, Horace He, Dawn Song, Jacob Steinhardt. May, 2021.

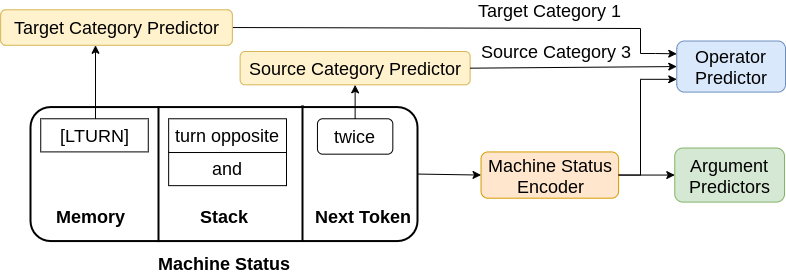

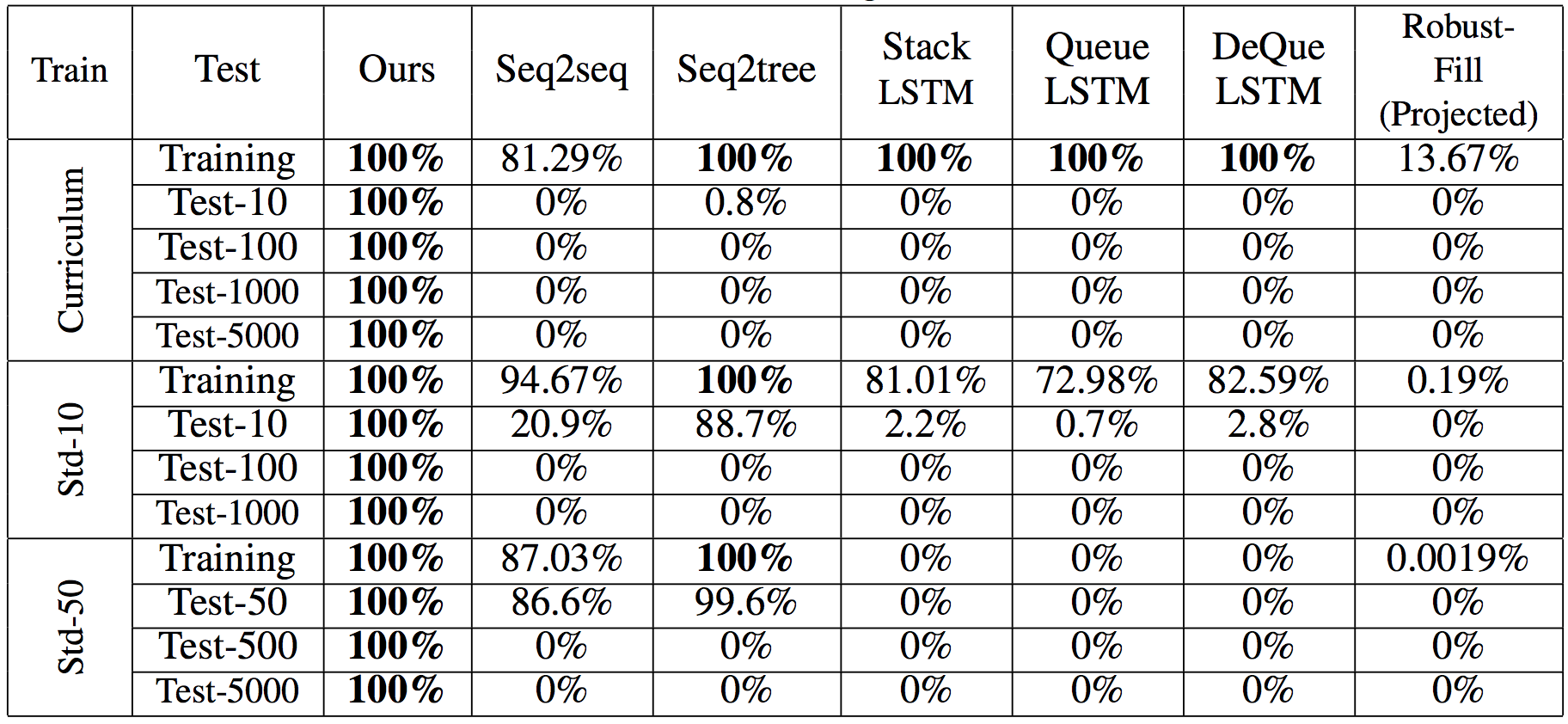

Compositional Generalization via Neural-Symbolic Stack Machines Xinyun Chen, Chen Liang, Adams Wei Yu, Dawn Song, Denny Zhou. Advances in Neural Information Processing Systems (NeurIPS). December, 2020.

Synthesize, Execute and Debug: Learning to Repair for Neural Program Synthesis Kavi Gupta, Peter Ebert Christensen*, Xinyun Chen*, Dawn Song. Advances in Neural Information Processing Systems (NeurIPS). December, 2020.

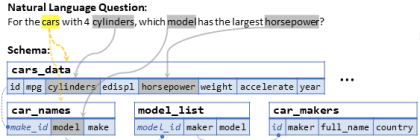

RAT-SQL: Relation-Aware Schema Encoding and Linking for Text-to-SQL Parsers Bailin Wang*, Richard Shin*, Xiaodong Liu, Oleksandr Polozov, Matthew Richardson. Annual Meeting of the Association for Computational Linguistics (ACL). July, 2020.

Xinyun Chen, Chen Liang, Adams Wei Yu, Denny Zhou, Dawn Song, Quoc V. Le. International Conference on Learning Representations (ICLR). May, 2020.

Deep Symbolic Superoptimization Without Human Knowledge Hui Shi, Yang Zhang, Xinyun Chen, Yuandong Tian, Jishen Zhao. International Conference on Learning Representations (ICLR). May, 2020.

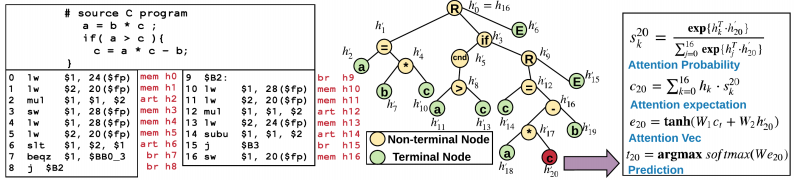

Coda: An End-to-End Neural Program Decompiler Cheng Fu, Huili Chen, Haolan Liu, Xinyun Chen, Yuandong Tian, Farinaz Koushanfar, Jishen Zhao. Advances in Neural Information Processing Systems (NeurIPS). December, 2019.

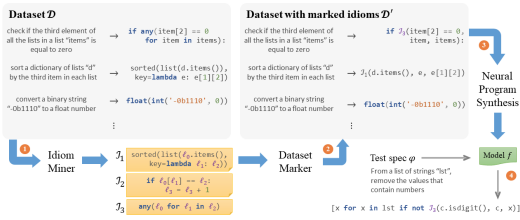

Program Synthesis and Semantic Parsing with Learned Code Idioms Richard Shin, Marc Brockschmidt, Miltiadis Allamanis, Oleksandr Polozov. Advances in Neural Information Processing Systems (NeurIPS). December, 2019.

Execution-Guided Neural Program Synthesis Xinyun Chen, Chang Liu, Dawn Song. International Conference on Learning Representations (ICLR). May, 2019.

Synthetic Datasets for Neural Program Synthesis Richard Shin, Neel Kant, Kavi Gupta, Chris Bender, Brandon Trabucco, Rishabh Singh, Dawn Song. International Conference on Learning Representations (ICLR). May, 2019.

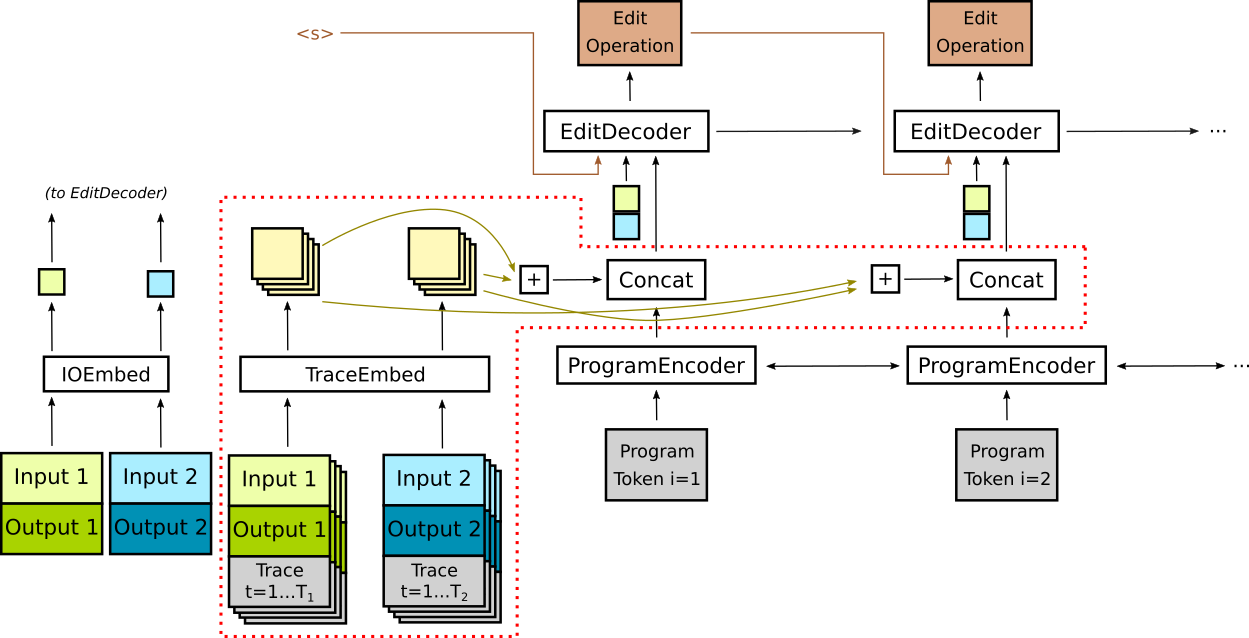

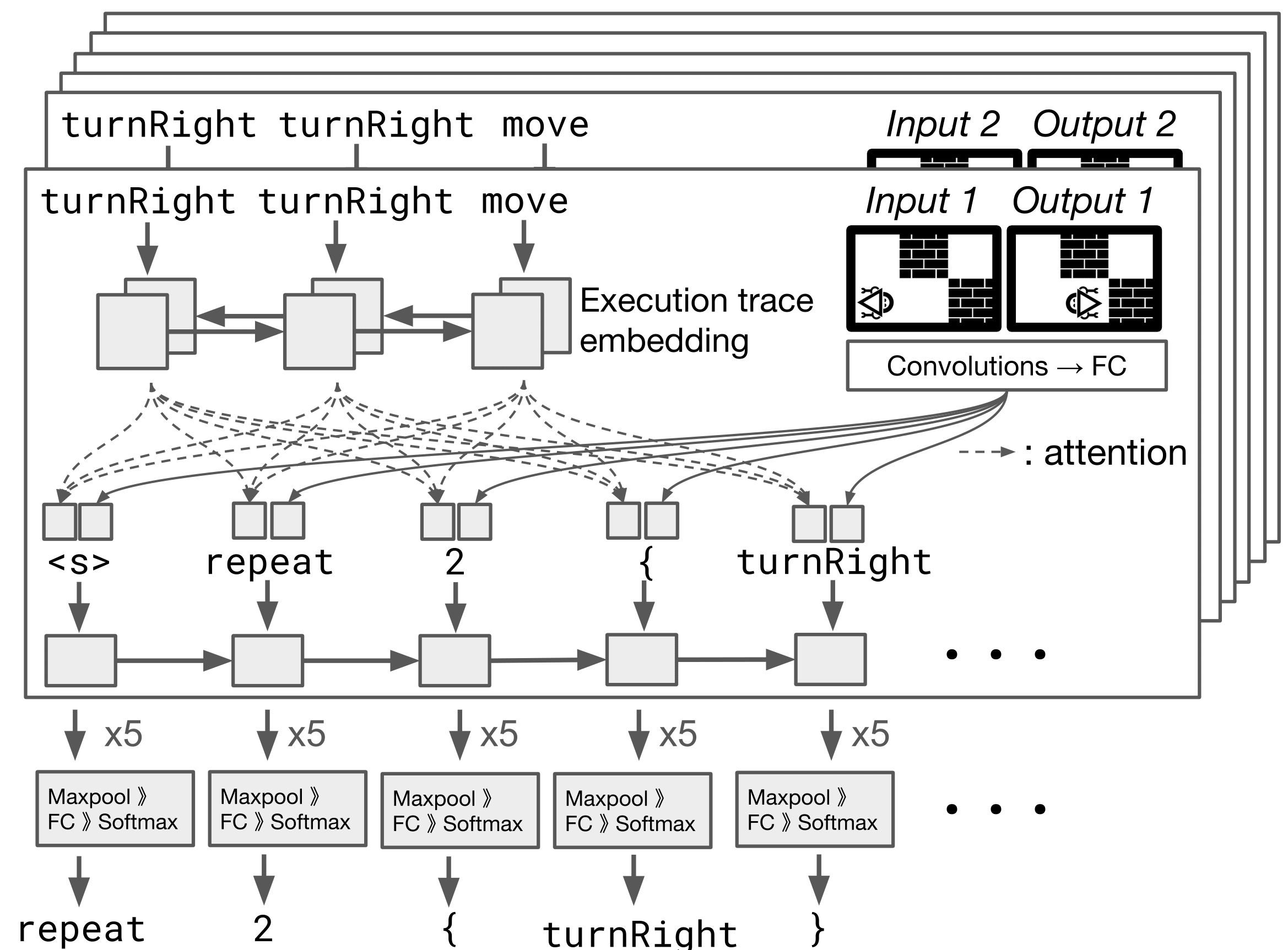

Improving Neural Program Synthesis with Inferred Execution Traces Richard Shin, Illia Polosukhin, Dawn Song. Advances in Neural Information Processing Systems (NIPS). December, 2018.

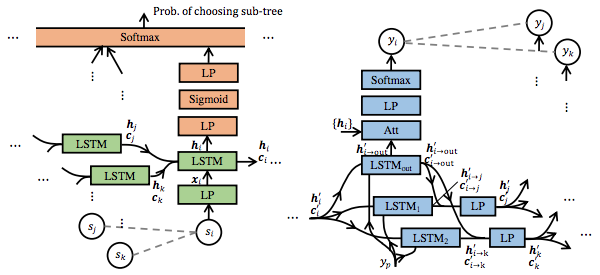

Tree-to-tree Neural Networks for Program Translation Xinyun Chen, Chang Liu, Dawn Song. Advances in Neural Information Processing Systems (NIPS). December, 2018.

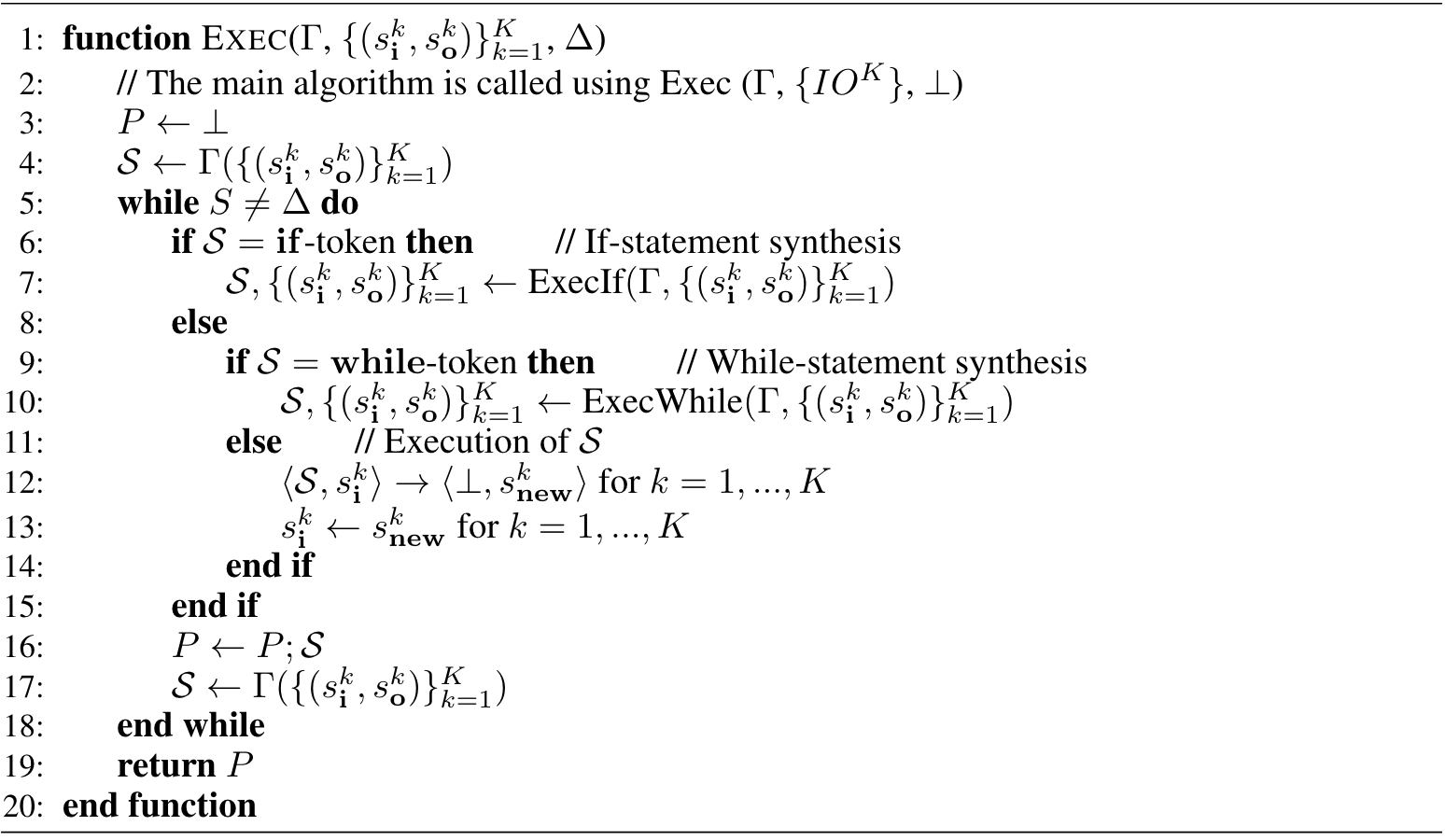

Towards Synthesizing Complex Programs from Input-Output Examples Xinyun Chen, Chang Liu, Dawn Song. International Conference on Learning Representations (ICLR). May, 2018.

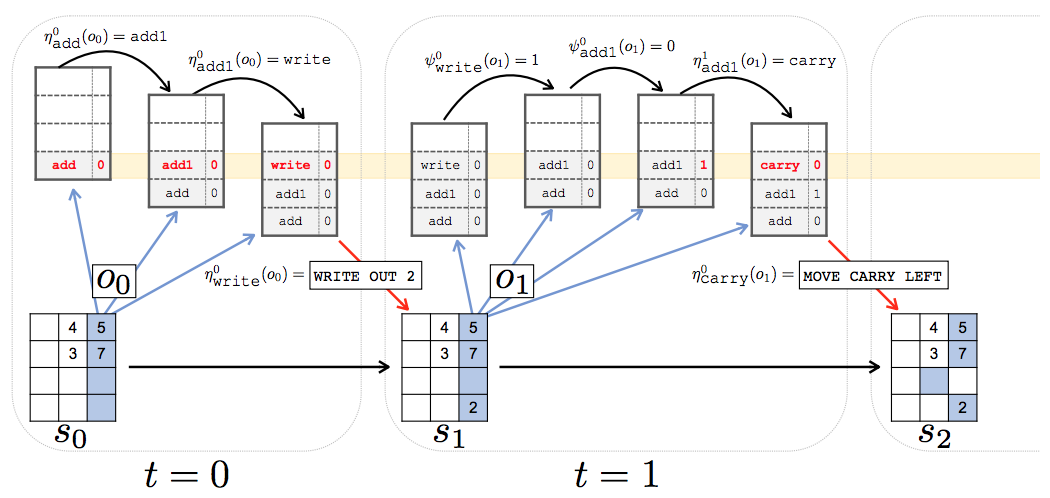

Parametrized Hierarchical Procedures for Neural Programming Roy Fox, Richard Shin, Sanjay Krishnan, Ken Goldberg, Dawn Song, Ion Stoica. International Conference on Learning Representations (ICLR). May, 2018.

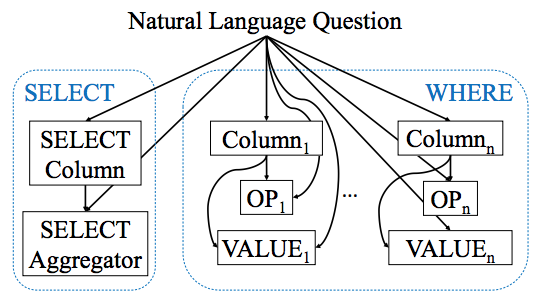

SQLNet: Generating Structured Queries From Natural Language Without Reinforcement Learning Xiaojun Xu, Chang Liu, Dawn Song. November, 2017.

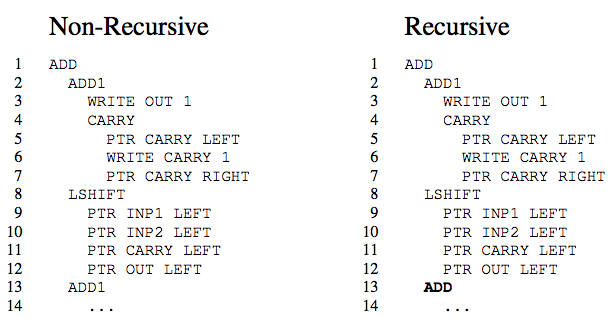

Making Neural Programming Architectures Generalize via Recursion Jonathon Cai, Richard Shin, Dawn Song. International Conference on Learning Representations (ICLR). April, 2017. Best Paper Award.

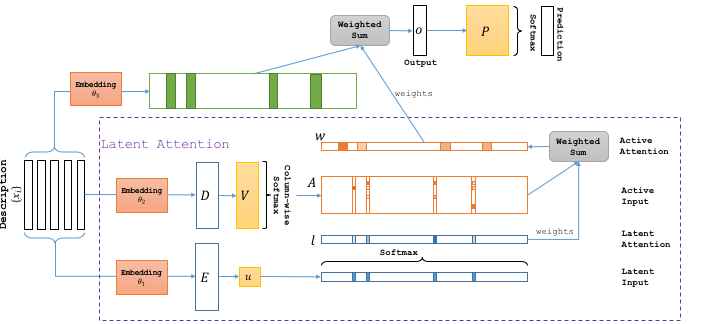

Latent Attention For If-Then Program Synthesis Xinyun Chen, Chang Liu, Richard Shin, Dawn Song, Mingcheng Chen. Advances in Neural Information Processing Systems (NIPS). December, 2016.

Faculty: Dawn Song

Postdocs:

Ph.D. Students: