Gallery of attack images: Clarifai's Moderation and NSFW Models

All of the original images are either sample images from Clarifai or were found online under Creative Commons licenses that allow non-commercial reuse.

All adversarial images were generated using the Iterative Gradient Estimation attack with query reduction using random grouping. Unless otherwise indicated, the number of iterations was set to 5 and the perturbation value to 16 for every image. The size of the random group was tuned for each image to keep the number of queries low while still allowing an adversarial image to be generated. In all image galleries, the original images appear first, immediately followed by their adversarial variants.
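The attack is not spelled out in this gallery, so the following is only a minimal sketch of the general idea, under stated assumptions. The model is treated as a black box that returns class probabilities. The gradient of the target class's probability (for example ‘safe’) is estimated with two-sided finite differences, with pixels shuffled into random groups that share a single estimate. Each iteration takes a sign step of size epsilon / iterations, and the perturbation value of 16 is read as an L-infinity bound on the 0 to 255 pixel scale. All function names, `toy_model`, `group_size` and `delta` are hypothetical, and `toy_model` merely stands in for a hosted classifier.

```python
import math
import random


def estimate_gradient(model, x, target, group_size, delta, rng):
    """Hypothetical helper: two-sided finite-difference estimate of the
    target-class probability's gradient, one estimate per random pixel group.
    Returns (gradient, queries_used)."""
    indices = list(range(len(x)))
    rng.shuffle(indices)  # random grouping: a fresh partition on every call
    grad = [0.0] * len(x)
    queries = 0
    for start in range(0, len(indices), group_size):
        group = indices[start:start + group_size]
        x_plus, x_minus = list(x), list(x)
        for i in group:
            x_plus[i] += delta
            x_minus[i] -= delta
        # Two black-box queries per group.
        diff = model(x_plus)[target] - model(x_minus)[target]
        queries += 2
        # diff / (2 * delta) approximates the sum of the group's partial
        # derivatives; dividing by the group size spreads it evenly.
        g = diff / (2 * delta * len(group))
        for i in group:
            grad[i] = g
    return grad, queries


def iterative_gradient_estimation_attack(model, x, target, epsilon=16.0,
                                         iterations=5, group_size=4,
                                         delta=1.0, seed=0):
    """Push x toward class `target` with sign-of-gradient steps, staying inside
    an L-infinity ball of radius epsilon (assumed meaning of 'perturbation
    value') and the valid [0, 255] pixel range."""
    rng = random.Random(seed)
    step = epsilon / iterations
    x_adv = list(x)
    total_queries = 0
    for _ in range(iterations):
        grad, q = estimate_gradient(model, x_adv, target, group_size, delta, rng)
        total_queries += q
        for i, g in enumerate(grad):
            sign = (g > 0) - (g < 0)
            value = x_adv[i] + step * sign
            value = min(max(value, x[i] - epsilon), x[i] + epsilon)  # L-inf projection
            x_adv[i] = min(max(value, 0.0), 255.0)  # keep valid pixel values
    return x_adv, total_queries


def toy_model(x):
    """Hypothetical stand-in for a hosted classifier: two classes, where the
    class-1 probability grows with mean pixel brightness."""
    z = (sum(x) / len(x) - 128.0) / 10.0
    p1 = 1.0 / (1.0 + math.exp(-z))
    return [1.0 - p1, p1]


if __name__ == "__main__":
    original = [100.0] * 16
    adversarial, queries = iterative_gradient_estimation_attack(
        toy_model, original, target=1)
    print(queries)  # 40: 5 iterations x 4 groups x 2 queries
    print(toy_model(adversarial)[1] > toy_model(original)[1])  # True
```

Larger groups cut the number of queries per iteration but make the gradient estimate coarser, which is why the group size is a per-image knob.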

Attacks on the Moderation model

The Moderation model hosted by Clarifai has 5 classes: ‘safe’, ‘suggestive’, ‘explicit’, ‘drug’ and ‘gore’.

Images of gore

The images on the left were both originally classified as ‘gore’ by the Moderation model with a confidence of 1.0. The first adversarial image is classified as ‘safe’ with a confidence of 0.69, and the second as ‘safe’ with a confidence of 0.55. The adversarial images needed 110 and 200 queries to the model, respectively, and each was generated in under a minute.

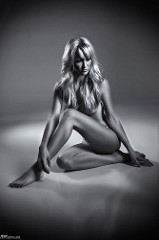
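Random grouping is what keeps these query counts small. Assuming two-sided finite differences, where each group costs two queries per iteration (an assumption about the query accounting, not something stated in this gallery), a query count can be turned back into the number of pixel groups used per iteration. The helper names below are hypothetical.

```python
import math


def queries_for_attack(num_pixels, group_size, iterations=5):
    """Queries used under the assumed accounting: two model calls per
    random group, one gradient estimate per iteration."""
    num_groups = math.ceil(num_pixels / group_size)
    return 2 * num_groups * iterations


def groups_implied_by(queries, iterations=5):
    """Invert the formula above: groups per iteration for a given query count."""
    per_iteration, remainder = divmod(queries, 2 * iterations)
    if remainder:
        raise ValueError("count does not fit the 2 x groups x iterations model")
    return per_iteration


if __name__ == "__main__":
    print(groups_implied_by(110))  # 11
    print(groups_implied_by(200))  # 20
    print(queries_for_attack(num_pixels=100, group_size=10))  # 100
```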

Suggestive images

The images below were originally classified as ‘suggestive’ by the Moderation model with confidences of 0.72 (top, first image) and 0.99 (bottom, left image), respectively. The corresponding adversarial images (top, second image; bottom, right image) were classified as ‘safe’ with confidences of 0.58 and 0.79, respectively. The first adversarial image needed 810 queries and the second needed 1150. Each took around 10 minutes to generate.

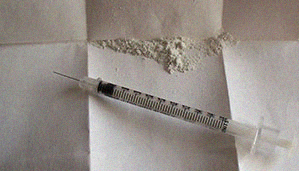

Images of drugs

The images on the left below were originally classified as ‘drug’ by the Moderation model with confidences (from top to bottom) of 1.000, 0.999, 0.987, 0.586, and 1.000. The adversarial images on the right were classified (from top to bottom) as ‘safe’ (confidence 0.769), ‘safe’ (0.549), ‘explicit’ (0.525), and ‘safe’ (0.759). Each adversarial image was generated with 197 queries.

Attacks on the NSFW classification model

The NSFW model hosted by Clarifai has just 2 classes: ‘sfw’ and ‘nsfw’. The first original image (topmost) is classified as ‘nsfw’ by the NSFW model with a confidence of 0.83, and the second original image (third from top) as ‘nsfw’ with a confidence of 0.85. The corresponding adversarial images are classified as ‘sfw’ with confidences of 0.65 (second from top) and 0.59 (bottom). They needed 1400 and 810 queries, respectively, and also took about 10 minutes each to generate.